Shout-out to my brother, Ivan, for the idea for this post.

There’s a popular custom survival world in Minecraft named Skyblock, in which the world places you atop a small island in the sky, with only some specific items (contained within a chest) and a single tree:

In general, the idea is for this single tree to indirectly provide the player with all the wood they could need for a playthrough, as players can harvest the tree’s materials, plant the saplings dropped from that tree, wait for the saplings to grow to a full tree, and then repeat.

A planted sapling. Image Source

Every sapling is guaranteed to grow to a full tree, but not every full tree is guaranteed to drop a sapling. Hence, the player could run out of trees at some point during the playthrough — the most obvious example is if the first tree yields no saplings.

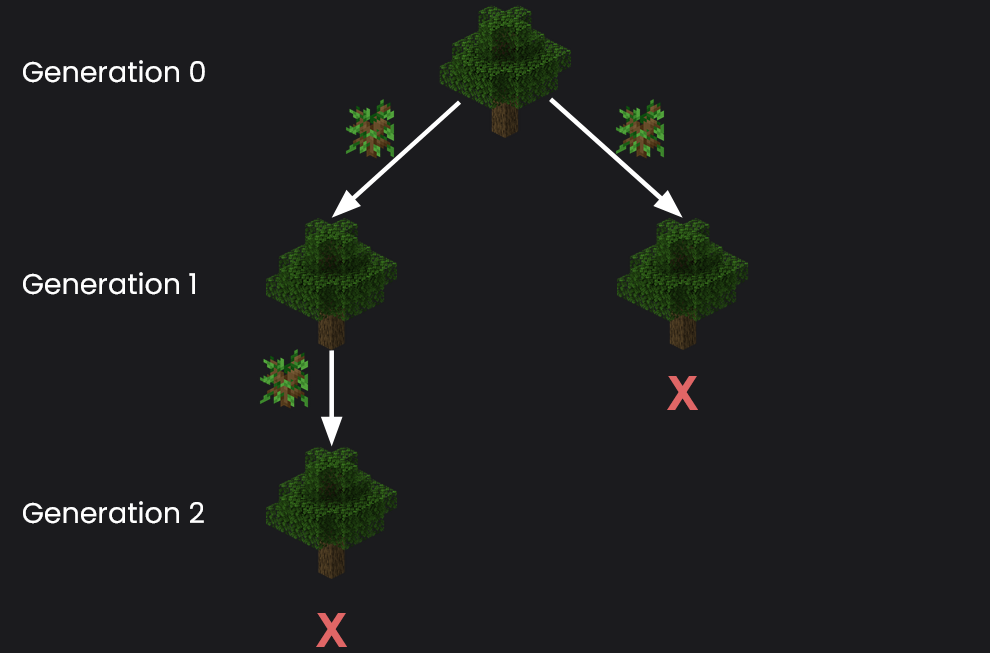

Another example is a scenario where the first tree yields two saplings, and then only one of the two trees grown from those saplings yields another sapling, which then yields none. Represented graphically, this looks like:

Theoretically, there are an infinite number of potential cases, as an arbitrarily large number of trees could be planted before every existing tree fails to yield a sapling. Hence, determining the probability of the player running out of trees is an interesting problem, and I’d like to share my approach.

First, let’s formally run through some notation and terminology that I’ll use hereon.

Let us define evaluating a tree as the process of taking a tree, breaking all of its leaf blocks, and counting how many saplings drop. For a tree \(t\), the notation \(t \rightarrow X\) means that \(t\) evaluated to \(X\) saplings, i.e. tree \(t\) directly produced \(X\) saplings.

Let us define ultimately evaluating a tree as the process of taking a tree, breaking all of its leaf blocks, regrowing all dropped saplings, and repeating until no more evaluation is available, counting all saplings present at the very end. For a tree \(t\), the notation \(t \rightsquigarrow X\) means that \(t\) ultimately evaluated to \(X\) saplings. Two things to note:

- The process of ultimately evaluating could continue indefinitely (for instance, if every tree happens to drops \(1\) sapling).

- All non-zero values of \(X\) are impossible to consider, as otherwise, we would be able to plant those \(X\) saplings, growing \(X\) trees, and then continue evaluating those new \(X\) trees.

Our overall goal is to find \(P(t \rightsquigarrow 0)\).

Finally, let us define a generation \(G_{k}\) as the trees produced by all trees in the previous generation, \(G_{k-1}\). That is, \(G_{k}\) can be determined by evaluating every tree in \(G_{k-1}\). The initial generation, \(G_{0}\), is comprised of the starting trees, which in Skyblock’s case, is just one. For an intuitive representation of generations, please refer to the diagram above.

To emphasise the difference between evaluating and ultimately evaluating, I’ll remark that the following holds: \[ P(t \rightsquigarrow 0) \neq P(t \rightarrow 0) \] \(P(t \rightsquigarrow 0)\) considers evaluating \(t\) and all of its subsequent possible generations, while \(P(t \rightarrow 0)\) considers only evaluating \(t\).

Intuitively, one can also see that the RHS is a strict lower-bound of the LHS, i.e.: \[ P(t \rightsquigarrow 0) > P(t \rightarrow 0) \] For instance, for \(t \rightsquigarrow 0\) to occur, it is not necessary (but is sufficient) that \(t \rightarrow 0\) occurs; however, we can also have additional cases like the one described previously.

Let us first tackle determining \(P(t \rightarrow X)\). We’ll work under the assumption that every tree has exactly \(L\)-many leaf blocks, where \(L\) is some positive integer, and we will denote the probability of a leaf block \(l\) dropping a sapling as \[ P(l = 1) \] As a leaf block dropping a sapling (or not dropping one) is a binary event, we have a binomial distribution, with the other possibility being \[ P(l = 0) = 1 - P(l = 1) \] Therefore, in general: \[ P(t \rightarrow X) = {L \choose X} \cdot P(l = 1)^{X} \cdot P(l = 0)^{L - X} \tag{1} \] Note: We also take the assumption that all leaf blocks (and trees) are independent to one another.

Now that we have an expression for \(P(t \rightarrow X)\), let’s look towards finding an expression for \(P(t \rightsquigarrow 0)\).

Let us rephrase \(P(t \rightsquigarrow 0)\) as the probability of the union of all possible events that could result in \(t \rightsquigarrow 0\), namely:

- \(t \rightarrow 0\)

- \(t \rightarrow 1\) and the tree produced ultimately evaluates to \(0\) trees.

- \(t \rightarrow 2\) and the two trees produced ultimately evaluates to \(0\) trees.

- \(\ldots\)

- \(t \rightarrow L\) and the \(L\) trees produced ultimately evaluates to \(0\) trees.

As each of these events are mutually exclusive (\(t\) can only produce a single number of saplings), the probability of their union is found by summing the probability of each individual case.

Let’s look at the first few cases.

Case 1: \(\enspace t \rightarrow 0\)

- In this case, \(t\) produces \(0\) saplings, so immediately, no more evaluation can occur. Thus, the probability of ultimately evaluating the result of this case and getting \(0\) saplings is \(100\%\), i.e. \(1\).

- Hence, the probability of this case occurring and then the result ultimately evaluating to \(0\) saplings is \(P(t \rightarrow 0) \cdot 1\)

Case 2: \(\enspace t \rightarrow 1\)

- In this case, \(t\) produces exactly \(1\) tree, \(t_{1}\). Thus, the probability of ultimately evaluating the result of this case and getting \(0\) saplings is \(P(t_{1} \rightsquigarrow 0)\). As trees are identical to one another (\(L\) is the same across trees), this can be rewritten as \(P(t \rightsquigarrow 0)\).

- Hence, the probability of this case occurring and then the result ultimately evaluating to \(0\) saplings is \(P(t \rightarrow 1) \cdot P(t \rightsquigarrow 0)\)

Case 3: \(\enspace t \rightarrow 2\)

- In this case, \(t\) produces exactly two trees \(t_{1}\) and \(t_{2}\). Thus, the probability of ultimately evaluating the result of this case and getting \(0\) saplings is the probability that both of these trees ultimately evaluate to \(0\) saplings. As trees are independent, this probability is \[ P(t_{1} \rightsquigarrow 0) \cdot P(t_{2} \rightsquigarrow 0) \] Then, as trees are identical, this is equivalent to \[ P(t \rightsquigarrow 0)^{2} \]

- Hence, the probability of this case occurring and then the result ultimately evaluating to \(0\) saplings is \(P(t \rightarrow 2) \cdot P(t \rightsquigarrow 0)^{2}\)

So, in general, for any case \(t \rightarrow X\), the probability that the case occurs and that the result of that case ultimately evaluates to \(0\) saplings is \[ P(t \rightarrow X) \cdot P(t \rightsquigarrow 0)^{X} \] We now have enough to give a useful equation for \(P(t \rightsquigarrow 0)\); as mentioned above, we sum the probabilities of each individual case: \[ P(t \rightsquigarrow 0) = \sum_{X = 0}^{L}\left( P(t \rightarrow X) \cdot P(t \rightsquigarrow 0)^{X} \right) \tag{2} \]

Our goal is to now solve Equation (2) for \(P(t \rightsquigarrow 0)\). However, observe that it is present on both sides of the equation.

If we expand the RHS of the equation, we can see that it is a polynomial in \(P(t \rightsquigarrow 0)\) of degree \(L\), with each coefficient being of the form \(P(t \rightarrow X)\). \[ \begin{align} P(t \rightsquigarrow 0) = & \enspace P(t \rightarrow 0) \cdot P(t \rightsquigarrow 0)^{0} \enspace+ \newline & \enspace P(t \rightarrow 1) \cdot P(t \rightsquigarrow 0)^{1} \enspace+ \newline & \enspace P(t \rightarrow 2) \cdot P(t \rightsquigarrow 0)^{2} \enspace+ \newline & \enspace \ldots \enspace+ \newline & \enspace P(t \rightarrow L) \cdot P(t \rightsquigarrow 0)^{L} \end{align} \] Solving such a polynomial to find an exact solution looked unfruitful, however, the equation looks as though fixed-point iteration can be applied; observe that the equation is of the form \(c = f(c)\), where \[ \begin{align} f(x) = & \enspace P(t \rightarrow 0) \cdot x^{0} \enspace+ \newline & \enspace P(t \rightarrow 1) \cdot x^{1} \enspace+ \newline & \enspace P(t \rightarrow 2) \cdot x^{2} \enspace+ \newline & \enspace \ldots \enspace+ \newline & \enspace P(t \rightarrow L) \cdot x^{L} \end{align} \] In other words, we repeatedly compute \[ c_{k} = f(c_{k-1}) \] and as \(k \rightarrow \infty\), \(c_{k}\) should become more and more accurate, giving us a very reasonable approximation of \(P(t \rightsquigarrow 0)\).

To determine the exact coefficients of the polynomial, I first chose some of the base values, namely \(L = 50\) and \(P(l = 1) = 0.05\), based upon the ‘real-(Minecraft-)world’ values found in the Minecraft Wiki (here and here), and then applied Equation 1.

To perform all of this computation, I wrote the following Python script:

from decimal import Decimal from math import comb NUM_OF_LEAVES = 50 PROB_SAPLING_FROM_LEAF = Decimal("0.05") def f(c_k: Decimal) -> Decimal: total = Decimal("0.0") for x in range(0, NUM_OF_LEAVES + 1): total += ( (c_k ** x) * # Equation (1) comb(NUM_OF_LEAVES, x) * ((1 - PROB_SAPLING_FROM_LEAF) ** (NUM_OF_LEAVES - x)) * (PROB_SAPLING_FROM_LEAF ** x) ) return total

I set \(c_{0}\) as \(P(t \rightarrow 0)\), seeing as we previously found it to be a lower-bound. Results of repeatedly executing the function (up to \(k = 10\)) are shown below:

| \(k\) | \(c_{k}\enspace\) (truncated to 8d.p.) |

|---|---|

| \(0\) | \(0.07694497\) |

| \(1\) | \(0.09417628\) |

| \(2\) | \(0.09852503\) |

| \(3\) | \(0.09965328\) |

| \(4\) | \(0.09994806\) |

| \(5\) | \(0.10002522\) |

| \(6\) | \(0.10004543\) |

| \(7\) | \(0.10005072\) |

| \(8\) | \(0.10005210\) |

| \(9\) | \(0.10005247\) |

| \(10\) | \(0.10005256\) |

As can be seen, \(c_{k}\) is converging towards \(0.100\) (3s.f.). Hence, we can conclude that \[ P(t \rightsquigarrow 0) \approx 10\% \] In other words, the probability of ultimately reaching no trees in Skyblock is approximately 10%.

To gain confidence in our ‘theory-heavy’ approach of finding \(P(t \rightsquigarrow 0)\), we can experimentally

regrow trees and see whether the experimentally-found probability aligns with the theoretical one; instead of literally

using a Minecraft world, I’ve written the following C++ code to simulate the process:

#include <random> #include <time.h> const int NUM_OF_LEAVES = 50; // Set-up the RNG std::random_device rand_dev; std::mt19937 generator(rand_dev()); std::uniform_real_distribution<> distr; int expand_tree() { int saplings_dropped = 0; for (int _ = 0; _ < NUM_OF_LEAVES; _++) { if (distr(generator) < 0.05) { saplings_dropped += 1; } } return saplings_dropped; } int experiment(int max_generation) { int trees = 1; int current_generation = 0; while (current_generation != max_generation) { int new_trees = 0; for (int _ = 0; _ < trees; _++) { new_trees += expand_tree(); } trees = new_trees; current_generation += 1; if (trees == 0) { break; } } return trees == 0; }

Note that this script is not particularly optimised, but I hope it is intuitive to read; expand_tree simulates

taking a tree and breaking all of its leaf blocks, with the returned integer representing the number of

saplings dropped. experiment simulates the ultimate evaluation of a single tree, but stops processing after

reaching a particular generation (max_generation).

If we find the probability of ultimately evaluating to \(0\) saplings within \(k\) generations, then as

\(k \rightarrow \infty\), we expect the experimentally-found

probability to approach \(P(t \rightsquigarrow X)\), i.e. approximately \(0.100\) for our chosen \(L\) and

\(P(l = 0)\). Using the following code, we can collect our results (varying MAX_GENERATION):

int main() { // Seed the RNG srand(time(NULL)); // Run an instance (of ultimately evaluating a tree) 1,000,000 times const int EXPERIMENT_COUNT = 1000000; // Vary the maximum number of generations from 1 to 8 for (int i = 1; i < 8; ++i) { int true_results = 0; for (int _ = 0; _ < EXPERIMENT_COUNT; _++) { if (experiment(i)) { true_results += 1; } } printf( "Probability of ultimately evaluating to 0 saplings within " "%d generation(s): %f%%\n", i, (double) true_results / EXPERIMENT_COUNT ); } }

| Maximum Generation | Probability (truncated to 8d.p.) |

|---|---|

| \(1\) | \(0.07690100\) |

| \(2\) | \(0.09412200\) |

| \(3\) | \(0.09814300\) |

| \(4\) | \(0.09963900\) |

| \(5\) | \(0.09977700\) |

| \(6\) | \(0.10002400\) |

| \(7\) | \(0.10003700\) |

| \(8\) | \(0.10072700\) |

As you can see, the experimental-probability appears to be getting very close to our theoretically-found value (i.e. approximately \(0.100\)). Hence, I believe that we can conclude that the theoretical result (and subsequent approximation) is accurate.